In a stark demonstration of artificial intelligence’s potential for misinformation, a computer-generated image presented as a photograph recently caused significant disruption to railway services across the United Kingdom. The incident highlights a growing challenge for infrastructure managers: distinguishing authentic visual documentation from sophisticated AI-generated imagery.

The AI Image Incident

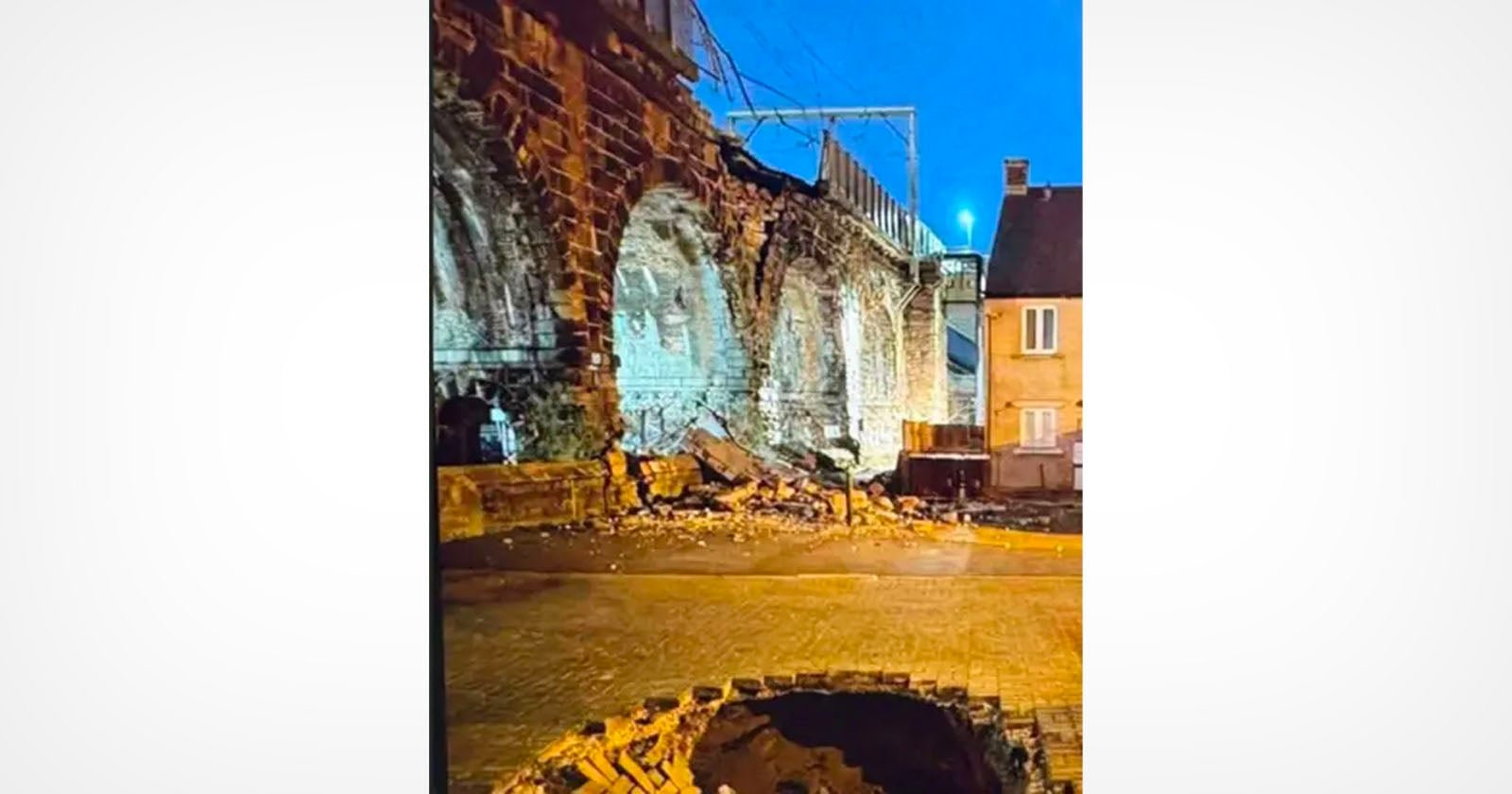

Transportation authorities were forced to halt train services after an AI-generated image appearing to show structural damage to a critical railway bridge circulated online. The hyper-realistic image triggered immediate safety protocols, and officials had to carry out a full physical inspection of the bridge.

This event underscores the increasing sophistication of generative AI, which can now produce images convincing enough to mislead both the public and decision-makers. Image forensics experts are increasingly concerned that such fabrications could trigger unnecessary operational disruptions.

Technological Implications

The incident serves as a critical case study in the emerging challenges of visual verification in the digital age. As AI image generation continues to advance, organizations must develop robust verification mechanisms to distinguish authentic documentation from computer-generated imagery.

Key Takeaway: The railway service interruption shows that increasingly realistic AI-generated images can have real-world consequences.